Suckers and Watersprouts in Viticulture

In viticulture, suckers and watersprouts are two types of unwanted shoots. They compete with the main plant for essential resources. Suckers grow from the base or collar of the grapevine trunk. Watersprouts, instead, appear higher up, usually from woody arms or branches.

Both types are non-productive, and removing them helps maintain vine health and improve yield. Because they grow in different places, each needs its own management strategy, so automated pruning and sucker-removal systems must be able to tell them apart.

The Challenge of Automatic Sucker Detection

Automatic sucker detection in vineyards with computer vision is challenging because suckers, watersprouts, other vine components, and the background can look very similar. If the model pays too much attention to irrelevant areas, it misclassifies objects and produces more false positives.

To improve the accuracy of our semantic segmentation model for vineyards, we added a preprocessing step that blurs all unlabelled regions in the training images and keeps only the annotated areas sharp.

During our experiments, we tested several different approaches:

- Training with image sizes of 512×512 and 640×640
- Using both YOLOv11n-seg and YOLOv11s-seg model architectures
- Evaluating models without data augmentation, with online augmentation, and with offline augmentation

The best results were achieved with the combination of Gaussian blur preprocessing, 640×640 image resolution, the YOLOv11s-seg model, and offline data augmentation. This setup was particularly effective given our limited number of training images, as the offline augmentation significantly increased the dataset size and variety.
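As a rough illustration of what offline augmentation means here, the sketch below generates several augmented copies of one image and its label mask before training, so that they can be written to disk as extra samples. The specific transforms (random horizontal flip and a random brightness gain), the function name `augment_offline`, and its parameters are assumptions chosen for illustration; the transforms actually used in the experiments are not listed above.

```python
import numpy as np


def augment_offline(image, mask, n_copies=3, seed=0):
    """Return `n_copies` augmented (image, mask) pairs to be saved before training.

    The transforms used here (random horizontal flip, random brightness gain)
    are illustrative assumptions, not the ones from the original experiments.
    """
    rng = np.random.default_rng(seed)
    samples = []
    for _ in range(n_copies):
        img, msk = image, mask
        if rng.random() < 0.5:
            # Geometric transforms must be applied to image and mask together.
            img, msk = img[:, ::-1], msk[:, ::-1]
        gain = rng.uniform(0.8, 1.2)
        # Photometric transforms change only the image, never the labels.
        img = np.clip(img.astype(np.float32) * gain, 0, 255).astype(np.uint8)
        samples.append((img, np.ascontiguousarray(msk)))
    return samples
```

Unlike online augmentation, which perturbs samples on the fly during training, the copies produced this way become a fixed part of the dataset.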

Here’s how the final preprocessing works:

- Create a binary mask that covers all annotated regions (suckers, watersprouts, trunk, etc.).
- Apply a Gaussian blur (13×13 kernel, σ=3) to a copy of the entire image.
- Combine the blurred and original images so that only the annotated regions remain sharp, while everything else stays blurred.

This technique helps the model focus on relevant objects, improving its ability to distinguish between suckers, watersprouts, and the background, without losing overall scene context.
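The three preprocessing steps above can be sketched as follows. This is a minimal NumPy-only version, assuming the annotations have already been rasterized into a boolean mask of shape H×W and that the image is an H×W×C `uint8` array; the function names and the reflect padding at the borders are assumptions made for illustration. In practice an image library's Gaussian blur would probably be used instead of the hand-written separable filter.

```python
import numpy as np


def gaussian_kernel_1d(ksize=13, sigma=3.0):
    """Return a normalized 1-D Gaussian kernel of odd length `ksize`."""
    if ksize % 2 == 0:
        raise ValueError("ksize must be odd")
    x = np.arange(ksize) - (ksize - 1) / 2.0
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def gaussian_blur(image, ksize=13, sigma=3.0):
    """Separable Gaussian blur of an H x W x C float image (reflect padding)."""
    kernel = gaussian_kernel_1d(ksize, sigma)
    pad = ksize // 2
    h, w = image.shape[:2]
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)), mode="reflect")
    # Filter along the vertical axis first, then along the horizontal axis.
    vertical = sum(wt * padded[i:i + h, :, :] for i, wt in enumerate(kernel))
    return sum(wt * vertical[:, j:j + w, :] for j, wt in enumerate(kernel))


def blur_unlabelled(image, mask, ksize=13, sigma=3.0):
    """Keep pixels where `mask` is True sharp and blur everything else."""
    img = image.astype(np.float32)
    blurred = gaussian_blur(img, ksize, sigma)    # step 2: blur the whole image
    keep = mask.astype(bool)[..., None]           # step 1: binary mask, H x W x 1
    combined = np.where(keep, img, blurred)       # step 3: sharp inside, blurred outside
    return np.clip(np.rint(combined), 0, 255).astype(np.uint8)
```

Calling `blur_unlabelled(image, mask)` returns an image of the same shape in which annotated pixels are unchanged and all other pixels come from the blurred copy.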

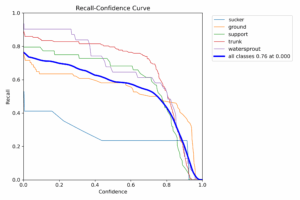

Recall-Confidence Curve

The recall-confidence curve shows that recall for trunk, ground, and support remains high, even with strict confidence thresholds. Sucker detection, which is particularly challenging, also shows clear improvement with this preprocessing method.
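For readers unfamiliar with this kind of plot, the sketch below shows one way a recall-confidence curve for a single class can be computed. It assumes each prediction has already been matched against the ground truth and flagged as a true positive or not; the function name and arguments are hypothetical and do not come from any particular library.

```python
import numpy as np


def recall_at_thresholds(confidences, is_true_positive, n_ground_truth, thresholds):
    """Recall of one class when only predictions with confidence >= t are kept."""
    conf = np.asarray(confidences, dtype=float)
    tp = np.asarray(is_true_positive, dtype=bool)
    # For each threshold, count the true positives that survive the cut.
    return np.array([(tp & (conf >= t)).sum() / n_ground_truth for t in thresholds])
```

A class whose recall stays high as the threshold rises is one the model detects with consistently confident predictions.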

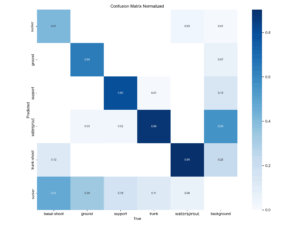

Normalized Confusion Matrix

The normalized confusion matrix highlights a significant reduction in confusion between suckers and the background. Classes such as trunk and watersprout show high accuracy, demonstrating that the model has learned to distinguish between different vine components more effectively.
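As a small illustration of what "normalized" means in this context, the sketch below divides each column of a raw count matrix by its sum. It assumes that columns index the true class and rows the predicted class, so that each column adds up to 1; the page does not state which convention the plot uses, so treat the axis choice as an assumption.

```python
import numpy as np


def normalize_confusion_matrix(counts):
    """Column-normalize a confusion matrix (rows: predicted, columns: true)."""
    counts = np.asarray(counts, dtype=np.float64)
    col_sums = counts.sum(axis=0, keepdims=True)
    # Columns with no samples are left as zeros instead of dividing by zero.
    return np.divide(counts, col_sums, out=np.zeros_like(counts), where=col_sums > 0)
```

With this convention, the diagonal entry of a column is the fraction of that true class that was predicted correctly.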

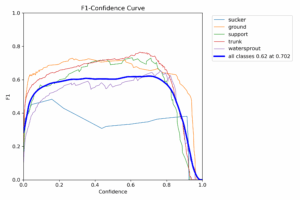

F1-Confidence Curve

The F1-confidence curve reflects a solid balance between precision and recall. The average F1-score across all classes is 0.62, with trunk and ground achieving the highest values, indicating robust overall performance.
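For reference, the F1-score of class c at confidence threshold t is the harmonic mean of that class's precision P and recall R. The class-averaged form on the right assumes an unweighted mean over the C classes, which is not stated explicitly above.

```latex
F_1^{(c)}(t) = \frac{2\,P_c(t)\,R_c(t)}{P_c(t) + R_c(t)},
\qquad
\overline{F_1}(t) = \frac{1}{C}\sum_{c=1}^{C} F_1^{(c)}(t)
```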

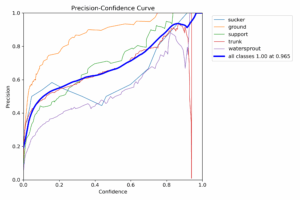

Precision-Confidence Curve

The precision-confidence curve shows that precision increases with higher confidence thresholds, especially for trunk and ground, which approach values of 1.00. Sucker and support classes also benefit from the blurring strategy, showing better results compared to experiments without this preprocessing.

By blurring the unlabelled regions of the training images, we achieved more robust and accurate sucker and watersprout detection in vineyards. This simple but effective preprocessing step improves semantic segmentation performance by letting the model focus on the annotated objects and reducing false positives from the background.

All image annotations for training and evaluation were performed using the Roboflow platform, which streamlined the dataset creation and labeling process.