
In this project we present a general framework for urban road layout estimation, together with a specific application to the vehicle localization problem. Localization is performed by synergistically exploiting data from different sensors, as well as by map-matching with cartographic maps.


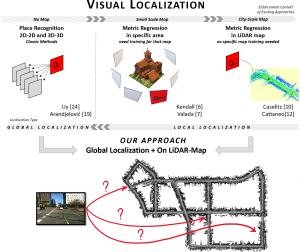

Global visual localization in LiDAR-maps through shared 2D-3D embedding space, submitted to IEEE ICRA 2020

Abstract: Global localization is an important and widely studied problem in many robotic applications. Place recognition approaches can be exploited to solve this task, e.g., in the autonomous driving field. While most vision-based approaches match an image against an image database, global visual localization within LiDAR-maps remains fairly unexplored, even though the trend toward high-definition 3D maps, produced mainly from LiDARs, is clear. In this work we leverage DNN approaches to create a shared embedding space between images and LiDAR-maps, enabling image-to-3D-LiDAR place recognition. We trained a 2D and a 3D Deep Neural Network (DNN) that create embeddings, from images and from point clouds respectively, which are close to each other when they refer to the same place. An extensive experimental activity is presented to assess the effectiveness of the approach with respect to different learning methods, network architectures, and loss functions. All evaluations were performed on the Oxford RobotCar Dataset, which encompasses a wide range of weather and lighting conditions.
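As a rough illustration of how a shared embedding space supports place recognition, the sketch below assumes the 2D and 3D networks have already mapped a query image and each LiDAR submap to fixed-length vectors. The hinge-style triplet loss, the Euclidean distance, the `margin` value and all function names are hypothetical choices for illustration; the abstract notes that several learning methods and loss functions were compared, and this sketch is not claimed to be any of them.

```python
import math
from typing import Dict, Sequence

Vector = Sequence[float]


def distance(a: Vector, b: Vector) -> float:
    """Euclidean distance between two embeddings of the same length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor: Vector, positive: Vector, negative: Vector,
                 margin: float = 0.5) -> float:
    """Hinge-style triplet loss (an assumed training objective).

    anchor:   image embedding (output of a hypothetical 2D network)
    positive: LiDAR-map embedding of the same place (hypothetical 3D network)
    negative: LiDAR-map embedding of a different place
    The loss is zero once the negative is at least `margin` farther
    from the anchor than the positive is.
    """
    return max(0.0, distance(anchor, positive) - distance(anchor, negative) + margin)


def localize(image_embedding: Vector, map_embeddings: Dict[str, Vector]) -> str:
    """Place recognition: return the id of the closest LiDAR submap embedding."""
    return min(map_embeddings,
               key=lambda place: distance(image_embedding, map_embeddings[place]))


if __name__ == "__main__":
    submaps = {"place_a": [0.0, 1.0], "place_b": [1.0, 0.0]}
    print(localize([0.9, 0.1], submaps))  # place_b
```

At query time only the nearest-neighbour search is needed; the loss matters only during training, where it pulls matching image and LiDAR embeddings together.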


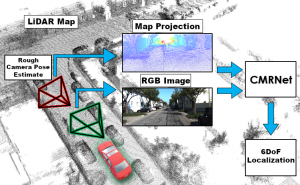

CMRNet: Camera to LiDAR-Map Registration, presented at the IEEE ITSC 2019 Conference within the Special Session on Urban Localization

Abstract: In this paper we present CMRNet, a real-time approach based on a Convolutional Neural Network (CNN) to localize an RGB image of a scene in a map built from LiDAR data. Our network is not trained on the working area, i.e., CMRNet does not learn the map; instead, it learns to match an image to the map. We validate our approach on the KITTI dataset, processing each frame independently without any tracking procedure. CMRNet achieves a median localization accuracy of 0.26 m and 1.05° on sequence 00 of the KITTI odometry dataset, starting from a rough pose estimate displaced by up to 3.5 m and 17°. To the best of our knowledge, this is the first CNN-based approach that learns to match images from a monocular camera to a given, preexisting 3D LiDAR-map.
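Median accuracy figures like these aggregate per-frame translation and rotation errors. The following is a minimal sketch of such an evaluation, assuming each pose is a translation in meters plus a unit quaternion; the pose format and function names are hypothetical, and the exact metric used in the CMRNet paper may differ.

```python
import math
import statistics
from typing import List, Tuple

# Hypothetical pose format: ((x, y, z) in meters, unit quaternion (w, x, y, z)).
Pose = Tuple[Tuple[float, float, float], Tuple[float, float, float, float]]


def translation_error(est: Pose, gt: Pose) -> float:
    """Euclidean distance between estimated and ground-truth positions (m)."""
    return math.dist(est[0], gt[0])


def rotation_error_deg(est: Pose, gt: Pose) -> float:
    """Angle of the relative rotation between two unit quaternions (degrees)."""
    # |q1 . q2| = cos(angle / 2); abs() handles q and -q encoding the same rotation.
    dot = abs(sum(a * b for a, b in zip(est[1], gt[1])))
    return math.degrees(2.0 * math.acos(min(1.0, dot)))


def median_errors(estimates: List[Pose], ground_truth: List[Pose]) -> Tuple[float, float]:
    """Median translation and rotation errors over independently processed frames."""
    t_err = [translation_error(e, g) for e, g in zip(estimates, ground_truth)]
    r_err = [rotation_error_deg(e, g) for e, g in zip(estimates, ground_truth)]
    return statistics.median(t_err), statistics.median(r_err)
```

Using the median rather than the mean keeps a few badly registered frames from dominating the reported figure, which suits a method that processes each frame without tracking.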


Vehicle localization using 3D building models and Point Cloud matching (work in progress)

Abstract: Detecting buildings in the surroundings of an urban vehicle and matching them to models available on map services is an emerging trend in localization for urban vehicles. In this paper we present a novel technique that improves on a previous work in this area by taking advantage of detected building façade positions and their correspondence with the 3D models available in OpenStreetMap (OSM). The proposed technique uses segmented point clouds produced from stereo images processed by a Convolutional Neural Network. The façade point clouds are then matched against a reference point cloud, produced by extruding the buildings' outlines as available on OSM. To produce a lane-level localization of the vehicle, the resulting information is then fed into our probabilistic framework, called Road Layout Estimation (RLE). We demonstrate the effectiveness of this proposal by testing it on sequences from the well-known KITTI dataset and comparing the results with those of a basic RLE version without the proposed pipeline.


Visual Localization at Intersections with Digital Maps, presented at the IEEE ICRA 2019 Conference

Abstract: This paper deals with ego-vehicle localization at intersections, a significant task in autonomous road driving. We propose an online vision-based method that can be applied whenever the intersection is visible. It relies on stereo images and on a coarse street-level pose estimate, which is used to retrieve intersection data from a digital map service. Pixel-level semantic segmentation and 3D reconstruction from state-of-the-art Deep Neural Networks are coupled with an intersection model; this yields good positioning accuracy compared with the state of the art in this task. To demonstrate the effectiveness of the method, and to make it possible to compare it with other methods, an extensive effort was made to set up a dataset of approaches to an intersection, which was then used to benchmark the proposed method. The dataset is available to the community and currently includes more than forty intersection approaches taken from KITTI. Another important contribution of the paper is the definition of criteria for comparing different methods on recorded datasets. The proposed method achieves nearly sub-meter accuracy in difficult real-world conditions.


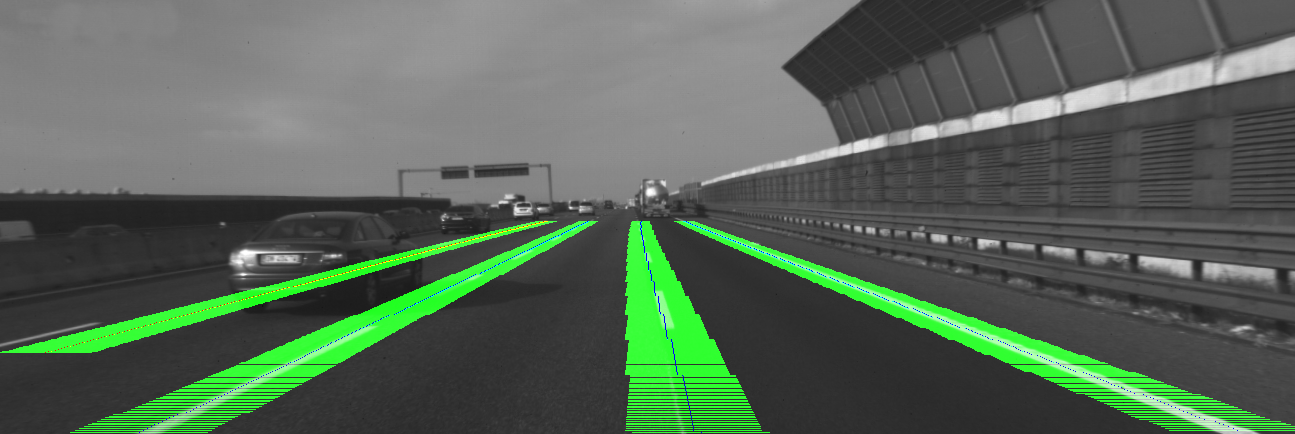

Ego-lane Estimation by Modelling Lanes and Sensor Failures, presented at the IEEE ITSC 2017 Conference

Abstract: In this paper we present a probabilistic lane-localization algorithm for highway-like scenarios, designed to increase the accuracy of the vehicle localization estimate. The contribution relies on a Hidden Markov Model (HMM) with a transient failure model. The idea behind the proposed approach is to exploit the availability of OpenStreetMap road properties to reduce the localization uncertainty that would result from relying only on a noisy line detector, by leveraging consecutive, possibly incomplete, observations. The effectiveness of the algorithm is demonstrated by employing a line detection algorithm and showing that we achieve a much more usable, i.e., stable and reliable, lane localization over more than 100 km of highway scenarios, recorded in both Italy and Spain. Moreover, since we could not find a suitable dataset for a quantitative comparison of our results with other approaches, we collected datasets and manually annotated the ground truth of the vehicle ego-lane. These datasets are publicly available to the scientific community.
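To make the HMM idea concrete, here is a minimal forward-filter sketch over lane indices, where `num_lanes` stands in for the OpenStreetMap road properties. The transition probabilities, the detector model and especially the failure handling (folded into the observation likelihood as an uninformative mixture component, rather than modeled as its own hidden state) are simplifying assumptions, not the model from the paper.

```python
from typing import List, Optional


def predict(belief: List[float], p_stay: float = 0.9) -> List[float]:
    """HMM prediction: keep the lane with p_stay, otherwise move to an adjacent lane."""
    n = len(belief)
    predicted = [0.0] * n
    for i, p in enumerate(belief):
        neighbors = [j for j in (i - 1, i + 1) if 0 <= j < n]
        if not neighbors:  # single-lane road: nowhere to move
            predicted[i] += p
            continue
        predicted[i] += p * p_stay
        for j in neighbors:
            predicted[j] += p * (1.0 - p_stay) / len(neighbors)
    return predicted


def correct(predicted: List[float], detected_lane: Optional[int],
            p_hit: float = 0.8, p_fail: float = 0.2) -> List[float]:
    """HMM correction with a simple (assumed) failure term.

    With probability p_fail the detector is failing and its output carries no
    information (uniform over lanes); otherwise it reports the true lane with
    probability p_hit. None means no detection was produced for this frame.
    """
    n = len(predicted)
    if detected_lane is None or n == 1:
        return list(predicted)
    posterior = []
    for lane, p in enumerate(predicted):
        p_sensor = p_hit if lane == detected_lane else (1.0 - p_hit) / (n - 1)
        likelihood = p_fail / n + (1.0 - p_fail) * p_sensor
        posterior.append(p * likelihood)
    total = sum(posterior)
    return [p / total for p in posterior]


def filter_lanes(num_lanes: int, detections: List[Optional[int]]) -> List[float]:
    """Run the forward filter over consecutive, possibly missing, detections."""
    belief = [1.0 / num_lanes] * num_lanes
    for z in detections:
        belief = correct(predict(belief), z)
    return belief
```

Because the failure term never drives a lane's likelihood to zero, a single wrong detection only nudges the belief, while consistent detections over several frames dominate.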


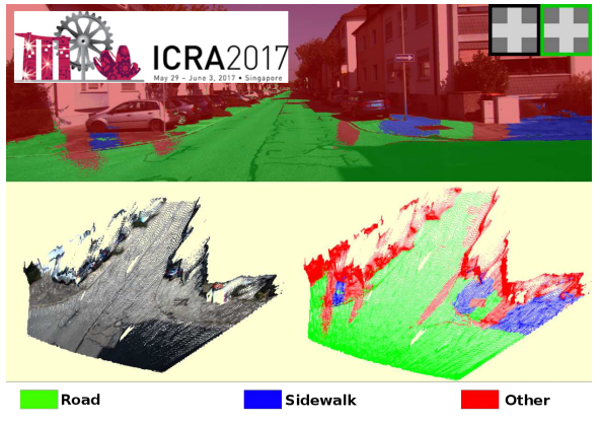

An Online Probabilistic Road Intersection Detector, presented at the IEEE ICRA 2017 Conference

Abstract: In this paper we propose a probabilistic approach for detecting and classifying urban road intersections from a moving vehicle. The approach is based on images from an onboard stereo rig; it relies on the detection of the road ground plane on the one hand, and on a pixel-level classification of the road on the other. The two processing pipelines are then integrated, and the parameters of the road components, i.e., the intersection geometry, are inferred. Unlike other state-of-the-art offline methods, which require processing the whole video sequence, our approach integrates the image data by means of an online procedure. The experiments were performed on the well-known KITTI dataset, allowing for future comparisons.
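The difference between online and offline processing can be illustrated with a recursive Bayesian update that folds in each frame's evidence as it arrives. This is a hypothetical simplification: the class names in `CLASSES`, the per-frame likelihoods and the `floor` parameter are assumptions, and the paper infers the intersection geometry parameters rather than only a class label.

```python
from typing import Dict, Iterable, List

# Hypothetical label set; the paper infers the intersection geometry itself.
CLASSES = ("straight", "T-left", "T-right", "crossroad")


def bayes_update(belief: Dict[str, float], frame_likelihood: Dict[str, float],
                 floor: float = 1e-6) -> Dict[str, float]:
    """One online step: multiply the belief by this frame's likelihoods and
    renormalise. `floor` stops a single bad frame from zeroing out a class."""
    posterior = {c: belief[c] * max(frame_likelihood.get(c, 0.0), floor) for c in belief}
    total = sum(posterior.values())
    return {c: p / total for c, p in posterior.items()}


def run_online(frames: Iterable[Dict[str, float]]) -> List[Dict[str, float]]:
    """Process frames as they arrive, recording the belief after each one."""
    belief = {c: 1.0 / len(CLASSES) for c in CLASSES}
    history = []
    for frame_likelihood in frames:
        belief = bayes_update(belief, frame_likelihood)
        history.append(belief)
    return history
```

Each step needs only the previous belief and the current frame, so an estimate is available at every frame instead of only after the whole sequence has been recorded.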


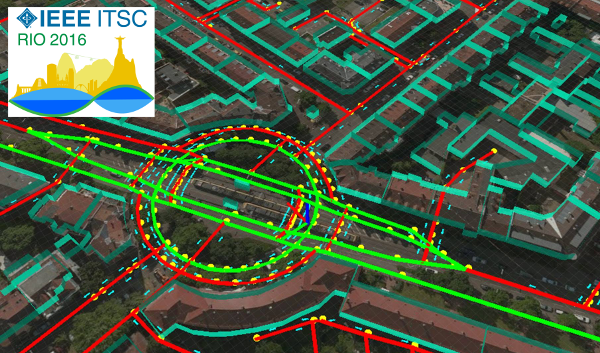

Leveraging the OSM building data to enhance the localization of an urban vehicle, presented at the IEEE ITSC 2016 Conference

Abstract: In this paper we present a technique that takes advantage of detected building façades and OpenStreetMap data to improve the localization of an autonomous vehicle driving in an urban scenario. The proposed approach leverages images from a stereo rig mounted on the vehicle to produce a mathematical representation of the buildings' façades within the field of view. This representation is matched against the outlines of the surrounding buildings as available on OpenStreetMap. The information is then fed into our probabilistic framework, called Road Layout Estimation, to produce an accurate lane-level localization of the vehicle. Experiments conducted on the well-known KITTI dataset demonstrate the effectiveness of our approach.
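One simple way to score a pose hypothesis against OSM building outlines is to move the detected façade points into the map frame and measure how far each falls from the nearest outline segment. The sketch below assumes façades are given as 2D points in the vehicle frame, outlines as 2D line segments, and a Gaussian error model with a hypothetical `sigma`; the façade representation and matching used in the paper may differ.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]
Pose2D = Tuple[float, float, float]  # hypothetical (x, y, heading in radians)


def point_segment_distance(p: Point, seg: Segment) -> float:
    """Shortest distance from point p to the line segment seg."""
    (ax, ay), (bx, by) = seg
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:  # degenerate segment
        return math.hypot(p[0] - ax, p[1] - ay)
    # Project p onto the segment's line and clamp to the endpoints.
    t = max(0.0, min(1.0, ((p[0] - ax) * dx + (p[1] - ay) * dy) / length_sq))
    return math.hypot(p[0] - (ax + t * dx), p[1] - (ay + t * dy))


def to_map_frame(p: Point, pose: Pose2D) -> Point:
    """Rigidly transform a vehicle-frame point into the map frame."""
    x, y, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    return (x + c * p[0] - s * p[1], y + s * p[0] + c * p[1])


def pose_likelihood(facade_points: Sequence[Point], outlines: Sequence[Segment],
                    pose: Pose2D, sigma: float = 0.5) -> float:
    """Gaussian likelihood of `pose` given façade points and OSM outline segments."""
    log_l = 0.0
    for p in facade_points:
        d = min(point_segment_distance(to_map_frame(p, pose), seg) for seg in outlines)
        log_l -= 0.5 * (d / sigma) ** 2
    return math.exp(log_l)
```

A pose that places the façade points exactly on an outline scores 1.0, and the score decays smoothly as the pose drifts, which makes it usable as a weight in a probabilistic filter.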


A framework for outdoor urban environment estimation, presented at the IEEE ITSC 2015 Conference

Abstract: In this paper we present a general framework for urban road layout estimation, together with a specific application to the vehicle localization problem. Localization is performed by synergistically exploiting data from different sensors, as well as by map-matching with OpenStreetMap cartographic maps. Its effectiveness is demonstrated by achieving real-time computation with state-of-the-art results on a set of ten non-trivial runs from the KITTI dataset, including both urban/residential and highway/road scenarios. Although this paper represents a first implementation step toward a more general urban scene understanding framework, here we demonstrate that it can be flexibly applied to different intelligent-vehicle applications.
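The abstract does not say how the sensor data are fused; a common choice for this kind of map-based localization is a particle filter, and the sketch below assumes one. Each particle is a hypothetical (x, y, heading) pose, each sensor or map-matching cue is a likelihood function, and the cues are multiplied under an independence assumption. This is an illustrative sketch, not the Road Layout Estimation implementation.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Pose = Tuple[float, float, float]  # hypothetical particle state: (x, y, heading)
Likelihood = Callable[[Pose], float]


def systematic_resample(particles: Sequence[Pose], weights: Sequence[float],
                        rng: random.Random) -> List[Pose]:
    """Draw len(particles) samples with probability proportional to weights."""
    n = len(particles)
    step = sum(weights) / n
    u = rng.uniform(0.0, step)
    resampled: List[Pose] = []
    i, cumulative = 0, weights[0]
    for k in range(n):
        target = u + k * step
        # Advance to the particle whose cumulative-weight interval contains target.
        while target >= cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(particles[i])
    return resampled


def filter_step(particles: Sequence[Pose], odometry: Pose, sensors: Sequence[Likelihood],
                rng: random.Random, noise: float = 0.1) -> List[Pose]:
    """Predict with odometry, weight by all cues (independence assumed), resample."""
    forward, lateral, turn = odometry
    moved = []
    for x, y, th in particles:
        # Odometry is expressed in the vehicle frame, so rotate it by the heading.
        nx = x + forward * math.cos(th) - lateral * math.sin(th) + rng.gauss(0.0, noise)
        ny = y + forward * math.sin(th) + lateral * math.cos(th) + rng.gauss(0.0, noise)
        moved.append((nx, ny, th + turn))
    weights = [math.prod(s(p) for s in sensors) for p in moved]
    if sum(weights) == 0.0:  # every hypothesis rejected: keep the prediction
        return moved
    return systematic_resample(moved, weights, rng)
```

New cues (for example a façade-matching score or a lane detector) can be added to `sensors` without changing the filter, which is one way a framework like this can stay flexible across applications.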


People involved in this project:

- Dario Limongi (master thesis)

- Axel Furlan (as post-doc)

- Augusto Luis Ballardini (PhD thesis, post-doc)

- Daniele Cattaneo (master thesis, PhD)

- Sergio Cattaneo (master thesis)

- Matteo Vaghi (master thesis)

- Domenico G. Sorrenti (supervisor)